Why do we want to blame individuals for the failures of our systems?

Once again, we read about the failure of a large system. For more than six hours at the beginning of October, Facebook suffered a total outage. WhatsApp and Instagram were also cut off from the Internet.

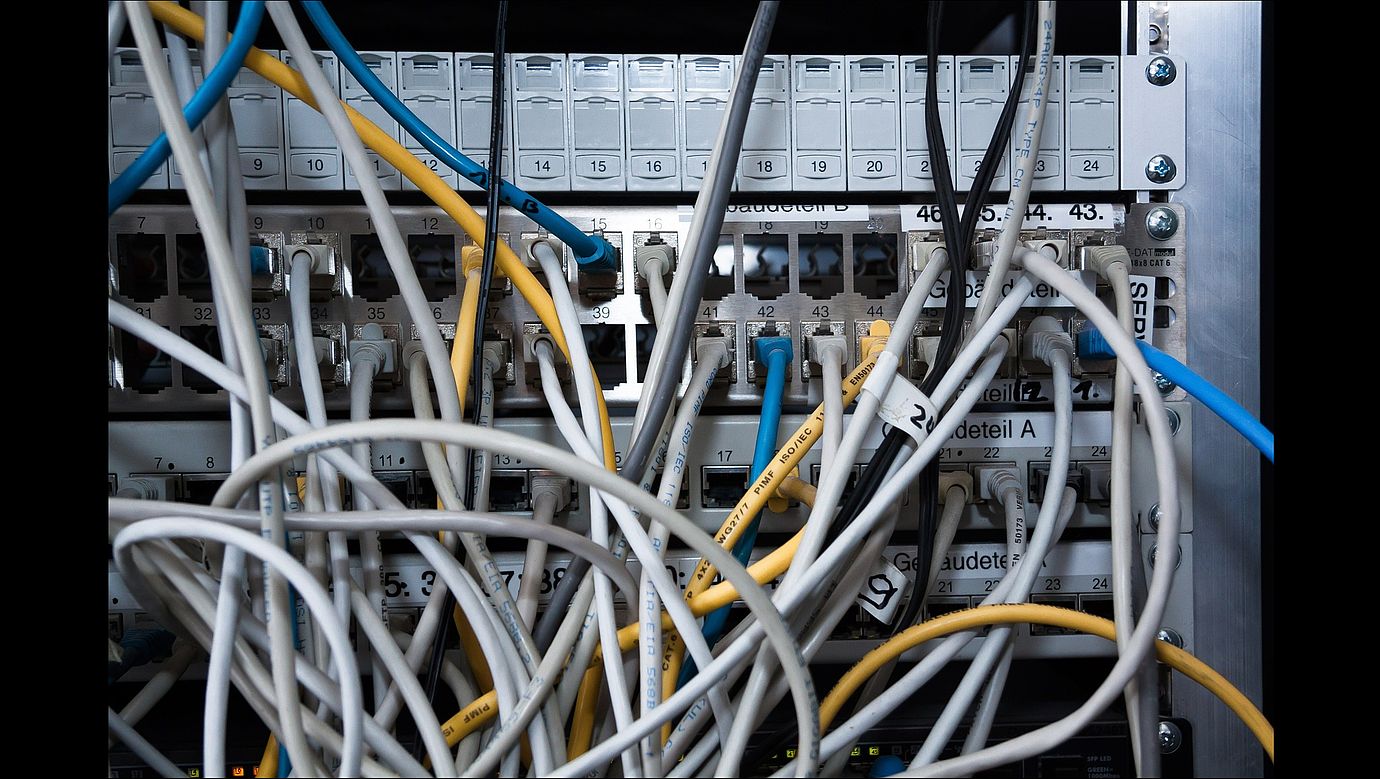

According to Internet experts, the crash was caused by human error. It is said to have been a faulty update to the configuration of the Border Gateway Protocol (BGP). BGP apparently works like a navigation system for the Internet: networks use it to announce which addresses they can reach and to pass this routing information on to one another. Please don't ask me for more details. I'm just relaying network know-how from experts here, rehashed for dummies. If BGP doesn't work properly, a single company, an entire country, or an entire continent can be cut off from the Internet. According to the experts, only a few employees have access to the BGP update mechanism, and only a very few are authorized to make changes to this navigation system.

How would you react as the person in charge?

Let's assume it was a single network engineer or programmer who disconnected the backbone system of Facebook, now a trillion-dollar company, from the network. And imagine you were this employee's direct superior. What would go through your mind? In view of the enormous damage, would you be upset because you suspected that your team members had not worked with the required diligence? Would you therefore accuse them of not having done a good job? You would probably feel the same as the numerous experts who have concluded that this was once again a case of human error.

Why is it, I ask you (and all the experts), that your perception narrows to the human factor and completely ignores the highly complex but flawed system? A system that only a few still understand. A system that experts cannot explain to us even in page-long explanations. Not even when they try to speak in simple language and use metaphors and analogies, which only signal to us that we have lost the plot and would do better to concern ourselves with other things.

But that is exactly what we should not do! We should stand up and fight back against such vulnerable and fragile systems. Just because a system is not a human being does not mean we should leave it out of our evaluation and see only the fallible human. A system, too, deserves to be scrutinized and, where necessary, indicted. It must not get off scot-free.

It is not primarily a human being who failed in this case. It is the poor performance of an ill-conceived system in which gross design flaws and technical omissions allow unacceptable failures. It is a system in which humans are assigned a totally inadequate role. Or have we forgotten that humans are fallible?

So why do we want to blame individuals for the failures of our systems?

In answering this question, I would like to discuss two hypotheses, knowing that they alone cannot answer it conclusively. The mechanisms are too complex for a single, 'correct' story to explain them.

Power

The obvious way to explain this inappropriate blaming of individuals is that it involves the raw exercise of power. In any failure of a highly complex system, there are people, organizations, or institutions that seek to defend their goods, their services, or their reputations. They do so by sacrificing individual employees or leaders in the event of an incident. This suggests that it is the protection of such interests that imposes guilt on, or in certain cases criminalizes, the actions of individuals. The idea is thus to divert attention from poorly set-up systems for which executives would have to bear responsibility. Admittedly, in any specific case this can be a gross insinuation. But we all know that it happens.

Organizations in which such practices are found suffer internally from a culture of mistrust. If such practices become public, there is inevitably a loss of trust among customers and all other stakeholders. If such companies want to bring their organizational and systemic risks under control, they have a long way to go. It begins with abandoning the tunnel vision that sees only human fallibility. It requires opening one's perception to a systemic perspective. This is an unpleasant process, and it is worthwhile to have a change agent, a coach, at your side. Overcoming intuitive reactions such as blaming individuals is tough and requires perseverance and patience. But this is the path that leads to the reliability, safety and resilience of the organization and its goods and services.

Anxiety

Today, when we read an air accident investigation report written by a professional authority, we realize that there is never a single reason for a disaster. This is also true for disasters in other highly complex sociotechnical systems. Rather, dozens of factors have an impact and are partly responsible for the failure. Only when they occur or act together can the accident happen. This simultaneous interaction is, painful as it may be to admit, pure coincidence. The complexity of today's aviation system, for example, is so immense that the fluctuations of its individual functions can lead to catastrophes, even though everyone has done everything right. This is a frightening insight.

This fear is rooted in the fact that we no longer have total control over the complex systems we design and operate. We fear that they may fail because of the intertwined, everyday interactions that take place within them. It would be much easier for us, and we would very much welcome it, if such system failures had a single, traceable and controllable cause. So, when it comes to finding the cause, we're not picky; not everything necessarily has to be on the table. Because that would be frightening: we would be made aware that we are dealing with a loss of control. How reassuring it is to tell ourselves that it was once again human error. Assigning blame to individuals, punishing them and thus criminalizing them is therefore also a protective mechanism that spares us from having to face reality and its terrible grimace.

Why do we assume that our systems are okay?

As we have seen, our IT experts come to the (self-)reassuring conclusion in their analysis of the Facebook outage that it must have been human error. How unbearable it would be for them to have to admit to themselves that they are specialists in an industry that produces such ailing and unreliable systems. What would be called for is modesty and humility in the face of what they have helped to build.

Pay attention the next time you hear about an accident or a serious incident in your environment. What does the press, the company involved, or your intuition present to you as the cause? If it is once again a scapegoat, notice the calming aura of this assumption. At the same time, observe how the system in which the incident occurred receives no attention and can continue its flawed existence unmolested in the shadow of the accusation against the guilty individual.